NVIDIA GeForce GTX 670 Review - Adaptive VSync, GPU Boost & More

GPU Boost & Adaptive VSync

The great thing about competition is that it brings out the best in good competitors. AMD has delivered power-consumption improvements with features such as Long Idle and ZeroCore. With the release of the 600-series, NVIDIA has added two new performance features: GPU Boost and Adaptive VSync.

With the Radeon HD 7900 series, AMD implemented its OverDrive feature, which lets the user raise the card's maximum power draw. This gives overclocked performance extra headroom when a game or application needs it; when it does not, the card does not draw the extra power, saving energy and reducing heat. NVIDIA now offers a similar capability with GPU Boost.

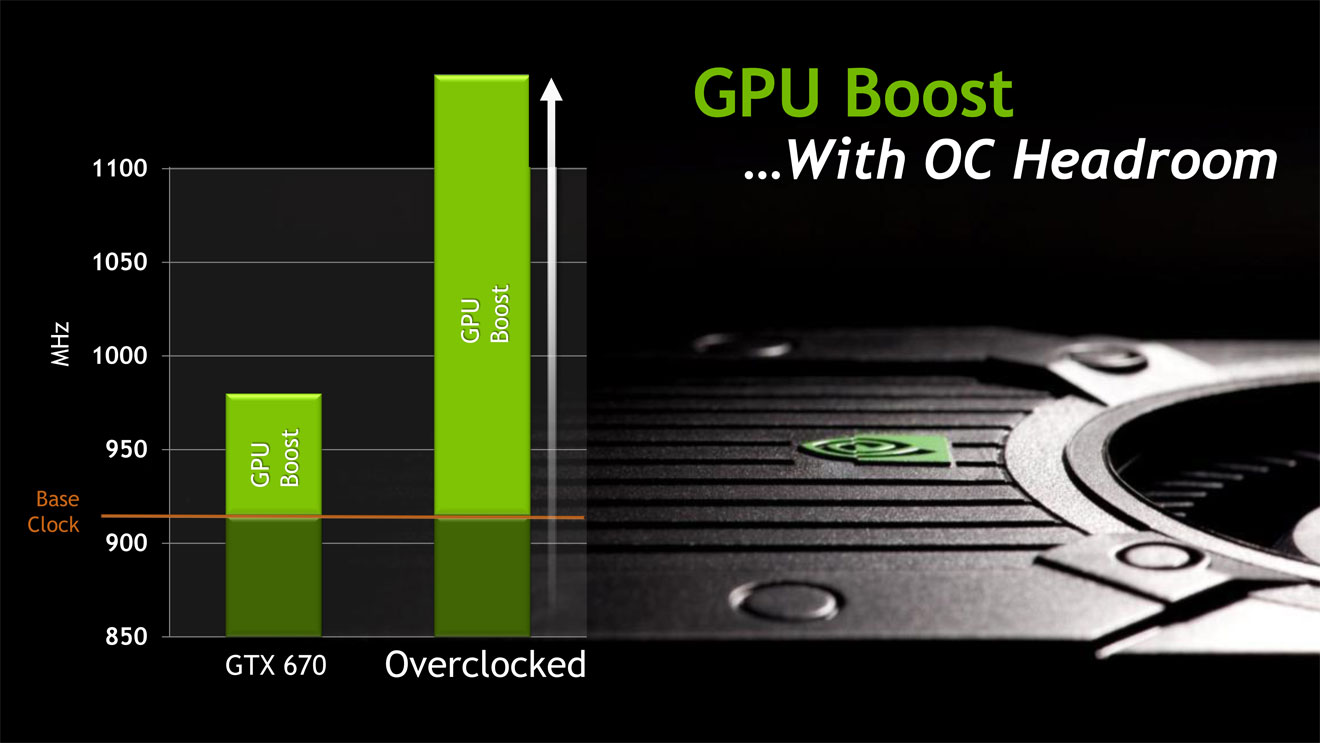

Rather than offering a driver setting for dynamic overclocking based on power draw, NVIDIA builds dynamic overclocking into the hardware itself. The NVIDIA driver and hardware work together to monitor 13 different data points related to performance. From these, the card determines when more performance is needed and raises its clock frequencies as far as needed (or as far as possible).
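NVIDIA has not published the algorithm behind GPU Boost or the full list of its 13 inputs, so the following is only a toy, hypothetical governor that illustrates the general idea: step the clock up while there is headroom under a power target, and back down when draw exceeds it, staying between the base clock and a maximum boost clock. The function name `next_boost_clock`, the use of power draw as the only input, the 13 MHz step size and every number below are assumptions for illustration, not GTX 670 specifications.

```python
def next_boost_clock(clock_mhz: int, power_w: float, power_target_w: float,
                     base_mhz: int, max_boost_mhz: int, step_mhz: int = 13) -> int:
    """One step of a toy, hypothetical boost governor driven only by power draw.

    The step size is an assumption. The real GPU Boost logic is not public.
    """
    if power_w < power_target_w and clock_mhz + step_mhz <= max_boost_mhz:
        return clock_mhz + step_mhz  # headroom under the power target: boost one step
    if power_w > power_target_w and clock_mhz - step_mhz >= base_mhz:
        return clock_mhz - step_mhz  # over the power target: back off one step
    return clock_mhz  # on target, or a further step would cross a clock limit: hold


# Illustrative numbers only (not GTX 670 specifications).
clock = 900
for power in (140, 150, 160, 175, 180):
    clock = next_boost_clock(clock, power, power_target_w=170,
                             base_mhz=900, max_boost_mhz=1000)
    print(power, "W ->", clock, "MHz")
# 140 W -> 913 MHz, 150 W -> 926 MHz, 160 W -> 939 MHz,
# 175 W -> 926 MHz, 180 W -> 913 MHz
```

A real implementation weighs many more signals than power alone; the point of the sketch is only that the clock floats within a range instead of sitting at one fixed "stock" value.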

In practice, the "stock" clock of an NVIDIA GTX 670 is now its base clock. Each card is also specified with an average and a maximum boost clock. Because the clock changes dynamically, there is no real way to test an NVIDIA card at a fixed "stock" clock; we can only note the base clock and the boost range.

Along with this performance-boosting feature, NVIDIA is also offering an intriguing and unique "limiter": Adaptive VSync. Traditional VSync synchronizes a game's frame output with the monitor's refresh cycle. It is mainly used to reduce the "tearing" that appears when game performance outstrips the monitor's refresh rate. In a sense, the game gets ahead of the monitor.

With VSync disabled, the experience can be erratic ("hurry up and wait", or possibly "creeping then running"). The user ends up rubber-banded between these extremes of performance, and the gameplay experience is very poor.

The problem with VSync is that it typically forces the game to run at an integer fraction of the monitor's refresh rate (60, 30 or 20fps on a 60Hz display). So what happens if a game generally runs at 40-50fps, with a few occasional spikes well above the 60Hz refresh rate? VSync trims the high extreme and caps performance at 60fps. This provides a more consistent and enjoyable experience, even if the average fps ends up lower. However, because the 40-50fps sections don't reach the 60fps threshold, they are artificially dropped to 30fps.
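The drop from 40-50fps to 30fps follows from how a double-buffered VSync pipeline waits for the next refresh. The sketch below is a simplified steady-state model, assuming a constant render time per frame and classic double buffering (triple buffering behaves differently): a frame that misses one refresh interval is held for a whole number of intervals, so the displayed rate becomes the refresh rate divided by that count. The function name `vsync_fps` is purely illustrative.

```python
import math


def vsync_fps(render_fps: float, refresh_hz: float = 60.0) -> float:
    """Displayed frame rate under double-buffered VSync (simplified model).

    Each frame waits for the next vertical refresh, so a frame that takes
    longer than one refresh interval occupies ceil(frame_time / interval)
    intervals. frame_time / interval simplifies to refresh_hz / render_fps.
    """
    if render_fps <= 0:
        raise ValueError("render_fps must be positive")
    intervals = math.ceil(refresh_hz / render_fps)
    return refresh_hz / intervals


for fps in (120, 60, 55, 45, 40, 25):
    print(f"{fps} fps rendered -> {vsync_fps(fps):g} fps displayed")
# 120 -> 60, 60 -> 60, 55 -> 30, 45 -> 30, 40 -> 30, 25 -> 20
```

Under this model anything between 30 and 59fps lands on 30fps, which is exactly the penalty described above.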

Adaptive VSync lets the game run at its real framerate below the 60fps mark, but caps anything above it at 60fps. This provides the best of both worlds: the smoothest possible performance and the best perceived experience. High-performing sections are capped at 60fps, while areas that fall below run at 45, 50 or 55fps rather than all being cut to 30fps.
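The rule described above can be written as a one-line decision. This is a minimal sketch of the behaviour as described, not NVIDIA's driver logic: VSync is treated as on when the game can reach the refresh rate and off below it (where, as with VSync disabled generally, some tearing is possible). The function name `adaptive_vsync_fps` is hypothetical.

```python
def adaptive_vsync_fps(render_fps: float, refresh_hz: float = 60.0) -> float:
    """Displayed frame rate under a simplified Adaptive VSync model."""
    if render_fps <= 0:
        raise ValueError("render_fps must be positive")
    if render_fps >= refresh_hz:
        return refresh_hz  # VSync on: synced to and capped at the refresh rate
    return render_fps  # VSync off: the real frame rate passes through (tearing possible)


for fps in (75, 60, 55, 50, 45):
    print(f"{fps} fps rendered -> {adaptive_vsync_fps(fps):g} fps displayed")
# 75 -> 60, 60 -> 60, 55 -> 55, 50 -> 50, 45 -> 45
```

Compared with traditional double-buffered VSync, only the above-refresh case changes the frame rate; the 45-55fps sections keep their real values instead of collapsing to 30fps.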

Taken together, GPU Boost and Adaptive VSync allow the GTX 670 to work as hard as it can to hit 60fps (by boosting clocks in demanding areas), then cap performance at 60fps (and lower clocks back down) in easy sections.

FXAA & TXAA

The world of anti-aliasing has certainly gotten more complicated over the last few years. We no longer have just the "simple" methods ("simple" being a relative term) such as super-sampling or multi-sampling, which take extra samples per pixel during rendering.

AMD recently added its own shader-based method, called MLAA (Morphological Anti-Aliasing). MLAA moves the AA calculations to the post-processing phase of the image pipeline (rather than the initial render). Beyond possible performance improvements, implementing AA as a post-process filter means that virtually any "modern" title can benefit from anti-aliasing, even if the game itself does not offer it.

NVIDIA has countered with two newer AA methods of its own: FXAA and TXAA. FXAA (Fast Approximate Anti-Aliasing) is a single-pass pixel shader that also runs as a post-processing effect. As with AMD's MLAA, NVIDIA now has an option in its control panel to force FXAA in any game. The expectation is 4xAA-level image quality with little or no performance hit. This would certainly appeal to Surround users who need to squeeze out every frame per second.
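Post-process methods such as FXAA work only on the finished image, which is why a driver can force them on without the game's cooperation. The sketch below illustrates just the first idea behind that approach, in the spirit of FXAA's early exit: flag pixels whose local luma contrast is high, then smooth only those. It is not NVIDIA's FXAA shader (which also estimates edge direction and searches along the edge); the Rec. 601 luma weights, both thresholds, the five-tap average and the function names are assumptions for illustration.

```python
def luma(r: float, g: float, b: float) -> float:
    """Perceived brightness using Rec. 601 weights (an assumption for this sketch)."""
    return 0.299 * r + 0.587 * g + 0.114 * b


def edge_mask(img, rel_threshold=0.125, abs_threshold=0.05):
    """Flag interior pixels whose local luma contrast exceeds a threshold.

    img is a 2D list of luma values in [0, 1]; border pixels are never flagged.
    Contrast is max minus min over the pixel and its four direct neighbours.
    """
    h, w = len(img), len(img[0])
    mask = [[False] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            taps = [img[y][x], img[y - 1][x], img[y + 1][x], img[y][x - 1], img[y][x + 1]]
            lo, hi = min(taps), max(taps)
            mask[y][x] = (hi - lo) >= max(abs_threshold, rel_threshold * hi)
    return mask


def smooth_edges(img, mask):
    """Replace each flagged pixel with the average of itself and its four neighbours.

    Expects a mask from edge_mask, so flagged pixels are never on the border.
    """
    out = [row[:] for row in img]
    for y, row in enumerate(mask):
        for x, is_edge in enumerate(row):
            if is_edge:
                out[y][x] = (img[y][x] + img[y - 1][x] + img[y + 1][x]
                             + img[y][x - 1] + img[y][x + 1]) / 5
    return out


# A hard vertical edge: dark left half, bright right half.
image = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
print(smooth_edges(image, edge_mask(image))[1])
```

Flat areas fail the contrast test and are left untouched, which is where the low cost of this family of filters comes from.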

TXAA is the second custom offering from NVIDIA. It combines hardware multi-sampling with custom shader-based AA code. The expectation is 8xAA-quality object edges for only a 2xAA-level performance hit (which can often be minimal). For a 4xAA-level performance hit, you can get "better than 8xAA" image quality.

The downside is that TXAA has to be implemented specifically in each title. It is being supported by developers such as Crytek and Epic Games for their respective engines (CryEngine and Unreal Engine), which will hopefully make adoption widespread. It is also being adopted in specific titles such as MechWarrior Online, EVE Online and Borderlands 2. With BL2 launching very soon, I plan to put together an overview of all the different AA options available.