NVIDIA GeForce 9800GX2 Review

Like most people, I am unable to upgrade my whole rig every year. While faster CPUs, motherboards and GFX cards come out every year (if not every six months), my wallet (and wife) dictate that upgrades come in cycles: GFX this year, CPU next year, and maybe the mobo (and probably RAM) some time after that. I've also never upgraded my GFX at every generation; over the years I skipped both the 6-series and the 8-series.

So, as my rig currently stands, it's not the speediest rig at the WSGF (that title belongs to Paddy). But I do think it is reflective of many readers' rigs: unlike most "professional" reviewers, they don't get to upgrade to the cutting edge each January.

I received my original GeForce 7950GX2 and ASUS nForce 590 motherboard (P5N32-SLI Premium/WiFi-AP) almost two years ago, when the 7950 was just launching. Almost a whole year went by before I was able to put together my entire rig. At that time the E6700 was at the top of the Core2Duo list, but my nForce 590 motherboard was already a generation behind. With only an 800MHz FSB, I settled on 4GB of Corsair XMS2 DDR2 800 memory.

With this upgrade to a GeForce 9800GX2, my GFX is again top of the line, my CPU is "last gen" (with Quad Cores now standard), and my RAM/mobo is two generations old (with the nForce 680 and 790 both supporting a 1333MHz FSB).

As of now, my rig stands at:

- GeForce 9800GX2 Reference Board from NVIDIA at 600MHz Core and 2000MHz Memory

- P5N32-SLI Premium/WiFi-AP

- Intel E6700 Core2Duo at 2x2.667GHz

- 4GB XMS2 Corsair RAM

- 2x Samsung 320GB T-Series HDD (one for the OS and games; one for swap file and FRAPS)

- LiteOn SATA Optical Drive

- Sound Blaster X-Fi XtremeGamer Fatal1ty Pro

- Enermax Infiniti 720W

- Lian-Li Black PC-777

- Dell 3007WFP

- Matrox Digital TripleHead2Go

- 3x HP LP1965

- Logitech G15 Keyboard & G5 Mouse

I'd like to upgrade the CPU to a Quad Core, but a Q6600 probably wouldn't give much "real world" improvement, and anything else would require a mobo and RAM upgrade to keep the CPU's FSB and the RAM in sync. At this point, I will probably just wait for Nehalem. Considering the benchmarks I hit with the 9800GX2, waiting won't be difficult.

Full Disclosure

NVIDIA is a sponsor/supporter of the WSGF, and as part of their support they supply hardware to the WSGF. Some I've kept to build/upgrade my gaming rig, and some I've given to Insiders. NVIDIA supplied both GX2 video cards, as well as the key components of my gaming rig, including the CPU, RAM and motherboard. Their support of the WSGF hasn't influenced this review. The benchmarks ran, and the numbers are what they are. Remember, I'm comparing two products from NVIDIA; this isn't a head-to-head vs. ATI.

Installation

The installation was relatively painless, and the issues I encountered had nothing to do with the GeForce 9800GX2. Before swapping video cards, I restored a Ghost image of a clean Windows XP SP2 install that I keep in reserve, and updated everything with the latest patches and drivers. I had held off for SP3, but when it was delayed I pressed on anyway. I had also downloaded the latest ForceWare 175.16 WHQL drivers. I had 169.21 installed before the swap, as that was the latest version for the 7950GX2.

I pulled out the old card (which required a 6-pin molex) and installed the new one (which requires both an 8-pin and a 6-pin for power). My Enermax power supply has modular cables, but includes one 6+2 pin molex cable in the hard-wired "main bunch." To power the 9800GX2 I had to dig out one of the modular 6-pin cables and add it to the rig. I ended up having to remove the power supply to get the cable plugged into it. This is due to my power supply and case configuration, and has nothing to do with the GeForce 9800GX2.

Upon reboot I was greeted by an ugly 640x480 Windows desktop (stretched across my TH2Go). I cancelled Windows' attempt to install drivers for the 9800GX2 and ran the 175.16 WHQL setup. One reboot later, everything was perfect.

NVIDIA GeForce 9800GX2 Review - Benchmarking

I don't know exactly how other sites and magazines test hardware. But I do know that they do it as a full-time job, and have a lot more equipment and time at their disposal. They are also "on the hook" to provide a consistent testbed for products from different companies, and to keep that testbed consistent across weeks and months. I can assure you that my testing methods weren't as stringent as those reviewers', but I do believe they reflect a real-world look at the hardware.

As mentioned earlier, I ghosted a drive with a clean install of WinXP SP2. I then updated that install with the latest Windows patches (just prior to SP3), and the latest drivers and BIOS from ASUS (mobo), NVIDIA and Creative Labs. I then installed every game that I was considering for this benchmarking exercise. Then, I began the benchmarking.

I didn't go through and strip Windows down to a limited set of drivers and services. On the contrary, I had a "normal" (but clean) install of Windows running, along with my "normal" run-down of programs such as Steam, AVG Anti-Virus, X-Fire and FRAPS.

Also, unless I needed to, I didn't restart the game or the machine between tests. Some games, like Oblivion, require a restart to change the resolution; others, like World in Conflict, showed declining numbers between runs if the machine wasn't rebooted. But for games like LOTRO and Bioshock, I simply ran one test after the other. Details on each benchmark process are outlined in each game's section.

Also, everything was run at stock clocks and speeds. Nothing is overclocked.

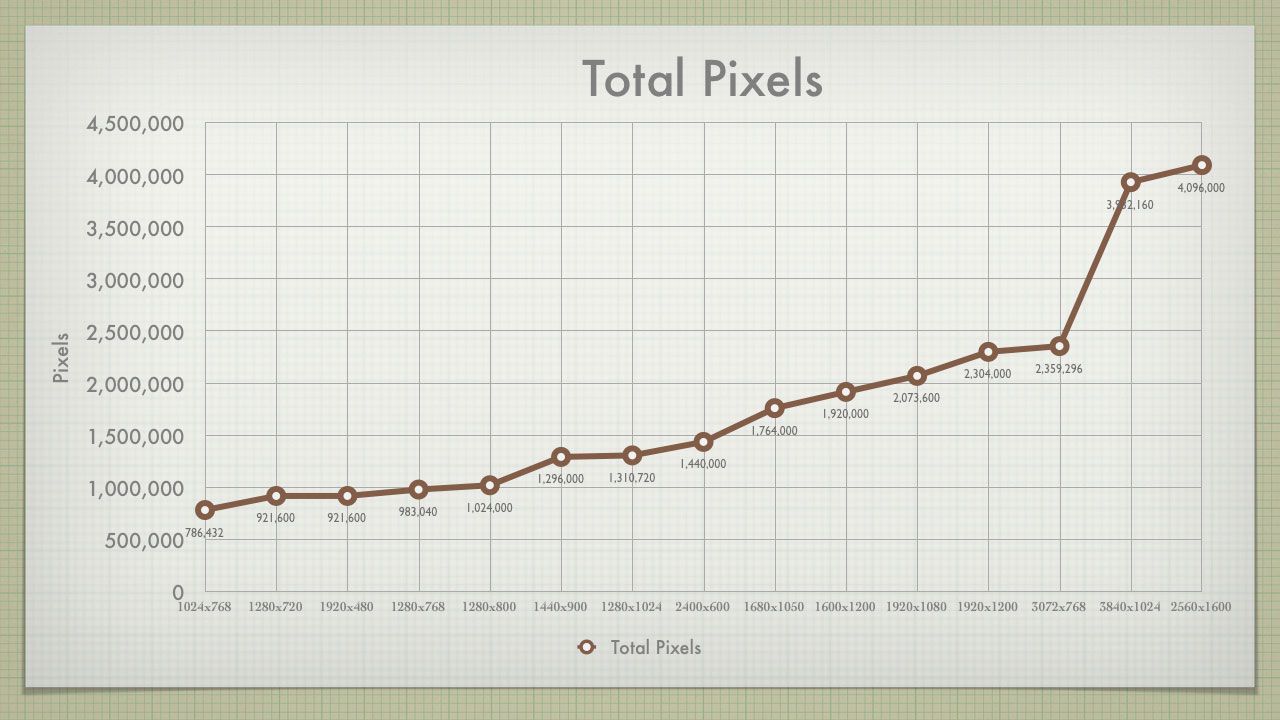

Total Pixel Counts

This graph shows the total pixels at each resolution, with the resolutions sorted by total pixel count. I have included all the common 4:3, Widescreen and Surround Gaming resolutions. The resolutions covered are:

- 5:4 - 1280x1024

- 4:3 - 1024x768, 1600x1200

- 16:10 - 1280x800, 1440x900, 1680x1050, 1920x1200, 2560x1600

- 15:9 - 1280x768

- 16:9 - 1280x720, 1920x1080

- Surround - 1920x480, 2400x600, 3072x768, 3840x1024

Looking at the graph, there are four "areas" of pixel count. They are:

- Up to 1M: These are the resolutions with up to 1M pixels (taking 1M as 1,024,000, the pixel count of 1280x800). This band covers the resolutions from 1024x768 to 1280x800. These are all considered very low-end resolutions, and the band covers most of the 720p HDTV world.

- 1M - 1.5M: This small band covers 1440x900 - 2400x600. This is the low end of mainstream. The first "stand alone" widescreen resolution is here (1440x900), as well as the venerable 5:4 LCD resolution of 1280x1024.

- 1.5M - 2.5M: While the "slope" of the first two bands was relatively flat, graphing these resolutions shows a more rapid increase in the pixel count. This covers the more mainstream resolutions of 1680x1050 and 1920x1200, as well as 1080p HDTV.

- 4M: This is the ultra high-end. This range covers the high end of Surround (3840x1024) and Widescreen (30" 2560x1600). Both of these resolutions clock in right around 4M pixels (3,932,160 and 4,096,000 respectively).
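To make the banding concrete, here is a small Python sketch that multiplies out every resolution named in this section, sorts them by pixel count, and assigns each one to a band. The band boundaries (1,024,000, 1.5M and 2.5M pixels) are my reading of the ranges described above rather than any official cutoff, and the function names are purely illustrative.

```python
"""Sort the tested resolutions by total pixel count and group them into bands."""

# (width, height, aspect label) for every resolution named in this section.
RESOLUTIONS = [
    (1280, 1024, "5:4"),
    (1024, 768, "4:3"), (1600, 1200, "4:3"),
    (1280, 800, "16:10"), (1440, 900, "16:10"), (1680, 1050, "16:10"),
    (1920, 1200, "16:10"), (2560, 1600, "16:10"),
    (1280, 768, "15:9"),
    (1280, 720, "16:9"), (1920, 1080, "16:9"),
    (1920, 480, "Surround"), (2400, 600, "Surround"),
    (3072, 768, "Surround"), (3840, 1024, "Surround"),
]


def pixel_band(pixels: int) -> str:
    """Map a pixel count to a band (boundaries are an assumed reading of the text)."""
    if pixels <= 1_024_000:  # 1280x800 is exactly 1,024,000 pixels
        return "<= 1M"
    if pixels <= 1_500_000:
        return "1M - 1.5M"
    if pixels <= 2_500_000:
        return "1.5M - 2.5M"
    return "~4M"


def sorted_by_pixels(resolutions):
    """Return (label, pixel count, band) tuples, smallest pixel count first."""
    rows = [(f"{w}x{h} ({aspect})", w * h, pixel_band(w * h))
            for w, h, aspect in resolutions]
    return sorted(rows, key=lambda row: row[1])


if __name__ == "__main__":
    for label, pixels, band in sorted_by_pixels(RESOLUTIONS):
        print(f"{label:<22} {pixels:>10,}  {band}")
```

Because the sort is stable, resolutions with identical pixel counts (1280x720 and 1920x480 are both 921,600) keep the order they are listed in.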

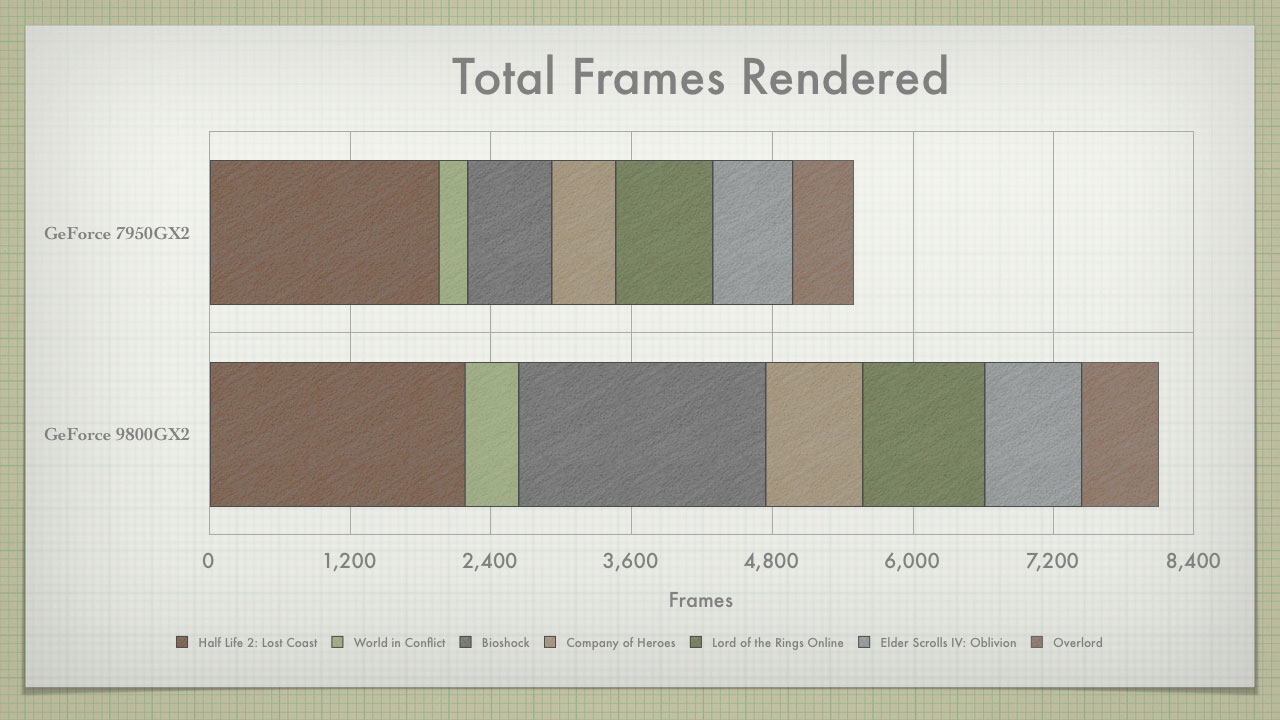

Total Frames Pushed

I saw this type of chart used somewhere else, and I thought it was interesting. These charts show the total amount of (average) frames pushed through each game at each resolution. They show that the 9800GX2 delivers a sizable performance increase over the 7950GX2 (as we would expect). The first chart shows that much of the growth comes in games like World in Conflict and Bioshock, and not from games like Half Life 2.

Looking at the second chart, we can see where the improvement comes in for each game. Bioshock sees significant improvements across the board, but Half Life 2 only picks up significant improvements at the top resolutions. And while World in Conflict only picks up 250 frames in total, that is almost double its previous performance.
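The "total frames pushed" metric is just a sum of each game's average fps over the tested resolutions. A minimal Python sketch, assuming results are stored as a hypothetical `{game: {resolution: average fps}}` dictionary (the layout and function names are mine, not from any tool used here):

```python
"""Sketch of the "total frames pushed" metric."""
from typing import Dict, Tuple

# Hypothetical layout: {game: {resolution: average fps}}
Results = Dict[str, Dict[str, float]]


def total_frames(results: Results) -> Dict[str, float]:
    """Sum each game's average fps across all of its tested resolutions."""
    return {game: sum(per_res.values()) for game, per_res in results.items()}


def total_gain(old: Results, new: Results) -> Dict[str, Tuple[float, float]]:
    """For each game tested on both cards, return (extra frames, new total / old total)."""
    old_totals, new_totals = total_frames(old), total_frames(new)
    return {
        game: (new_totals[game] - old_totals[game],
               new_totals[game] / old_totals[game])
        for game in old_totals
        if game in new_totals
    }
```

Because this is a plain sum, high-fps runs at low resolutions dominate a game's total, so a game with low frame rates can gain few frames in absolute terms while its ratio approaches 2.0 ("almost double").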

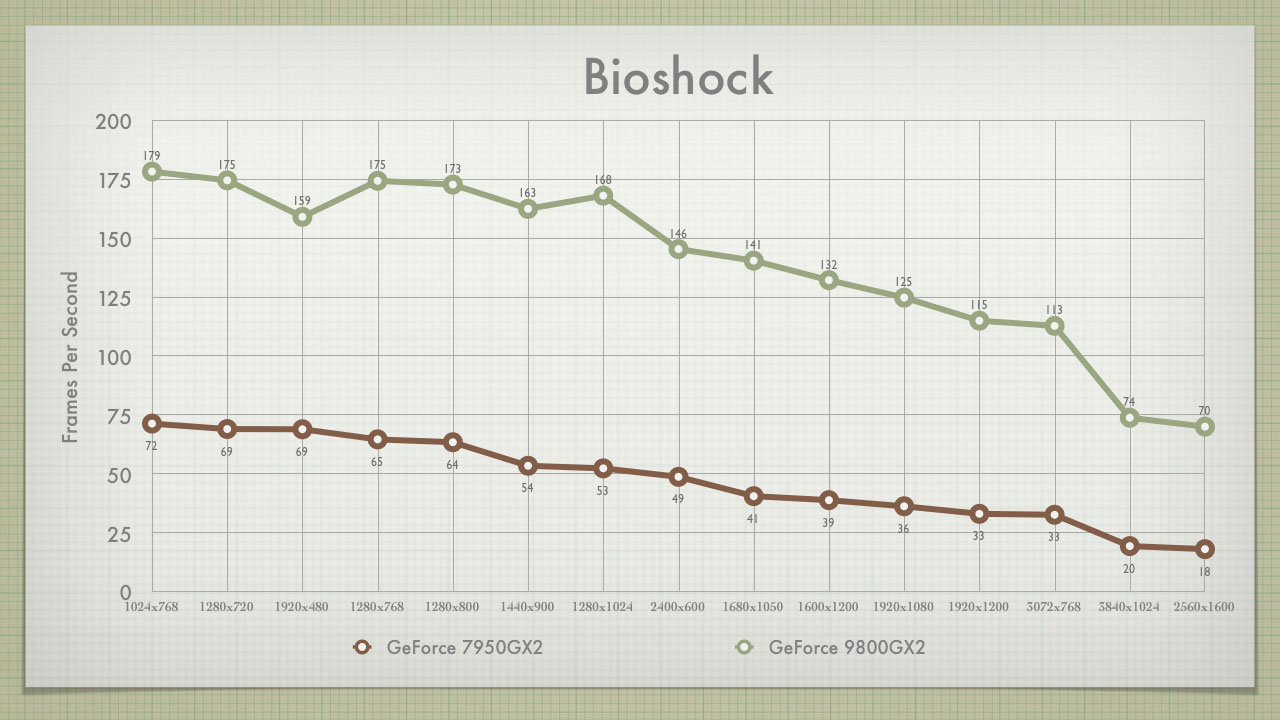

NVIDIA GeForce 9800GX2 Review - Benchmarking Bioshock

Bioshock is a relatively new game, having been released in August 2007. The game itself was lauded for its art direction and its graphics execution. With the 7950GX2, Bioshock was obviously GFX limited, with a relatively flat, downward-sloping line. It didn't show any of the "dips" at high aspect ratio resolutions, like those in Surround Gaming. Move to the 9800GX2, and you see a dramatic improvement, and the limits begin to shift from the GFX to the CPU.

With the 9800GX2, we see a dip in fps at 2400x600, the first Surround Gaming resolution. We also see the characteristic uptick at 1280x1024 (the 5:4 aspect ratio is the narrowest available). Performance-wise, the 9800GX2 shines across the board. At the low end, increases of 100fps are common, often increasing performance by 1.5x to 2x. The middle resolutions bring the fps increases down to the 80-90fps range, but the 9800GX2 still pushes 110-150fps across that range. At the 4M pixel mark, we see increases to the tune of 50fps (+250%), and we cross the holy grail of 60fps.

All settings were maxed out, and the "Horizontal FOV" was unlocked, making the game Hor+. To test Bioshock, I played the game from the opening crash scene into the bathysphere, and let the bathysphere take its ride into Rapture; basically, from the green loading screen to the red loading screen. This scenario provided a consistent gameplay experience that was suitable for benchmarking. However, it may not be indicative of "real" gameplay.

The opening section in the water had few objects and was largely dark sky (though it did have good fire and water effects), and the bathysphere section had a fixed "viewport" for looking out into the game world. That fixed viewport may have kept objects out of the extra field of view that widescreen and Surround Gaming aspect ratios reveal.
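For readers new to the term, Hor+ means the vertical field of view stays fixed while the horizontal field of view widens with the aspect ratio, which is why wider screens show more of the scene. A minimal Python sketch of the usual relationship, tan(hFOV/2) = tan(vFOV/2) × (width / height); the function name and the "90 degrees at 4:3" starting point are illustrative assumptions, not Bioshock's actual FOV values.

```python
"""Hor+ scaling: fixed vertical FOV, horizontal FOV grows with aspect ratio."""
import math


def hor_plus_hfov(vfov_deg: float, width: int, height: int) -> float:
    """Horizontal FOV in degrees for a fixed vertical FOV at a given resolution."""
    half_v = math.radians(vfov_deg) / 2
    return math.degrees(2 * math.atan(math.tan(half_v) * width / height))


if __name__ == "__main__":
    # Assumed example: pick the vertical FOV that gives 90 degrees horizontally at 4:3.
    vfov = math.degrees(2 * math.atan(0.75))  # roughly 73.7 degrees
    for w, h in [(1024, 768), (1920, 1200), (3840, 1024)]:
        print(f"{w}x{h}: {hor_plus_hfov(vfov, w, h):.1f} degrees horizontal")
```

Under these assumptions, a 3840x1024 Surround setup sees roughly 141 degrees across instead of 90, which is the extra scenery that a fixed viewport can leave empty.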

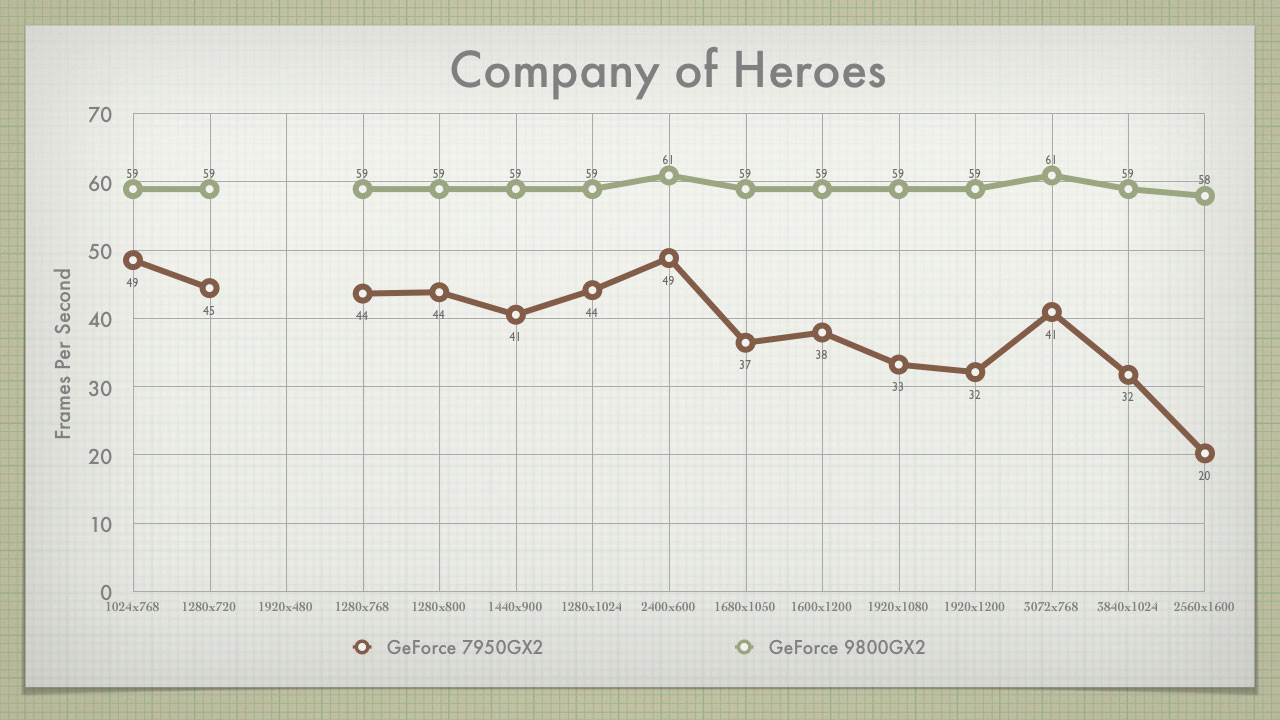

NVIDIA GeForce 9800GX2 Review - Benchmarking Company of Heroes

Company of Heroes includes an in-game stress test as well. It is a "movie" rendered in real-time, using the in-game engine. The movie is anamorphic, and it letterboxes in 4:3 or 5:4, and pillarboxes in Surround. It appears to be locked at 60fps.

The 7950GX2 struggled with the test, and posted diminishing numbers as the pixel count increased. With the 7950GX2, the game is obviously GFX limited.

The 9800GX2 completely turns the tables, and the demo maxes out at 60fps across the board. Two of the Surround resolutions did clock in at 61fps. My best explanation is that the large black areas (the pillarboxing) helped bump the fps just above 60.

The test was run with everything on max settings. But, considering the limited nature of the test, I don't find it a true benchmark of the 9800GX2's real performance. The 9800GX2 performed significantly better across the board, but I am left wondering how well it truly could have performed.

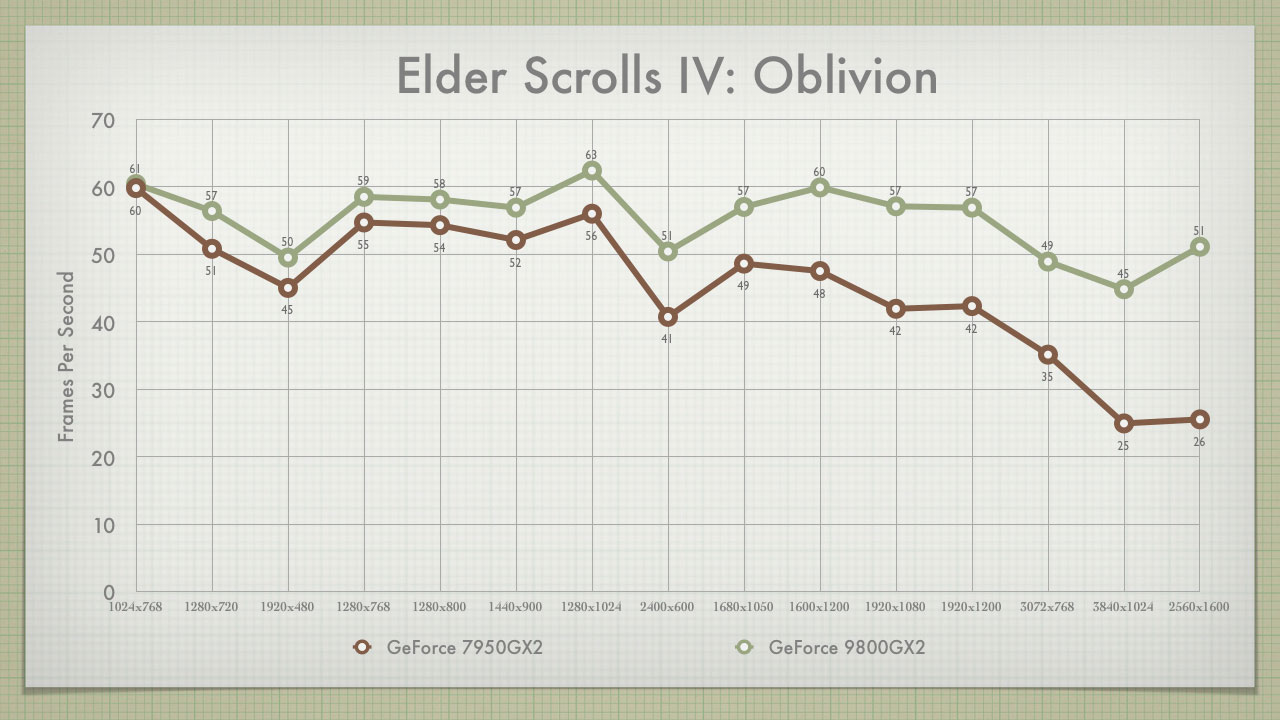

NVIDIA GeForce 9800GX2 Review - Benchmarking Elder Scrolls IV: Oblivion

The 9800GX2 added 4-6fps at the low end, which equates to almost a 10% increase. In the mid-range the performance increase grows, adding 10-15fps. This equates to a 25%-33% increase. The 4M pixel range adds 20-25fps, which is right at a 100% increase. The 9800GX2 certainly improves performance at the higher end, keeping the average frame rate at a playable 50fps.

But after tooling around Oblivion for a bit, I have to say I am disappointed; not in the 9800GX2, but in Oblivion. The game seems to have some sort of 60fps cap on it (after Googling, I found a number of people who think the same thing), and the game "chunks" at the same points every time. Even with the 9800GX2 at the lowest resolutions, I could still count on the spots where the frame rate would "hitch" and go south of 30fps. That, and I hate wolves. I don't know how many times I had to restart a run due to some damn wolf that chased me for miles.

Oblivion is a punishing game, and there are fan-made mods that make it even more so. I installed all of the "highly recommended" mods from TweakGuides' extensive article on Oblivion (even the 2GB of high-quality textures). I then downloaded an end-game save, so I could teleport around to find a good run where I could put the cards through their paces.

I found a nice long run (about six minutes) that crossed a large lake outside the capital, circled through the woods, and ended at a look-out over the same lake (looking at the capital). This run goes through a ton of foliage and scenery, and I was able to hit some cloud cover that changed the entire light map from normal sunlight to a red hue. That was pretty cool to watch. I still hate wolves; I don't know why they chase a fully armored knight for what seems like miles, or why a few swings of a sword don't put them down.

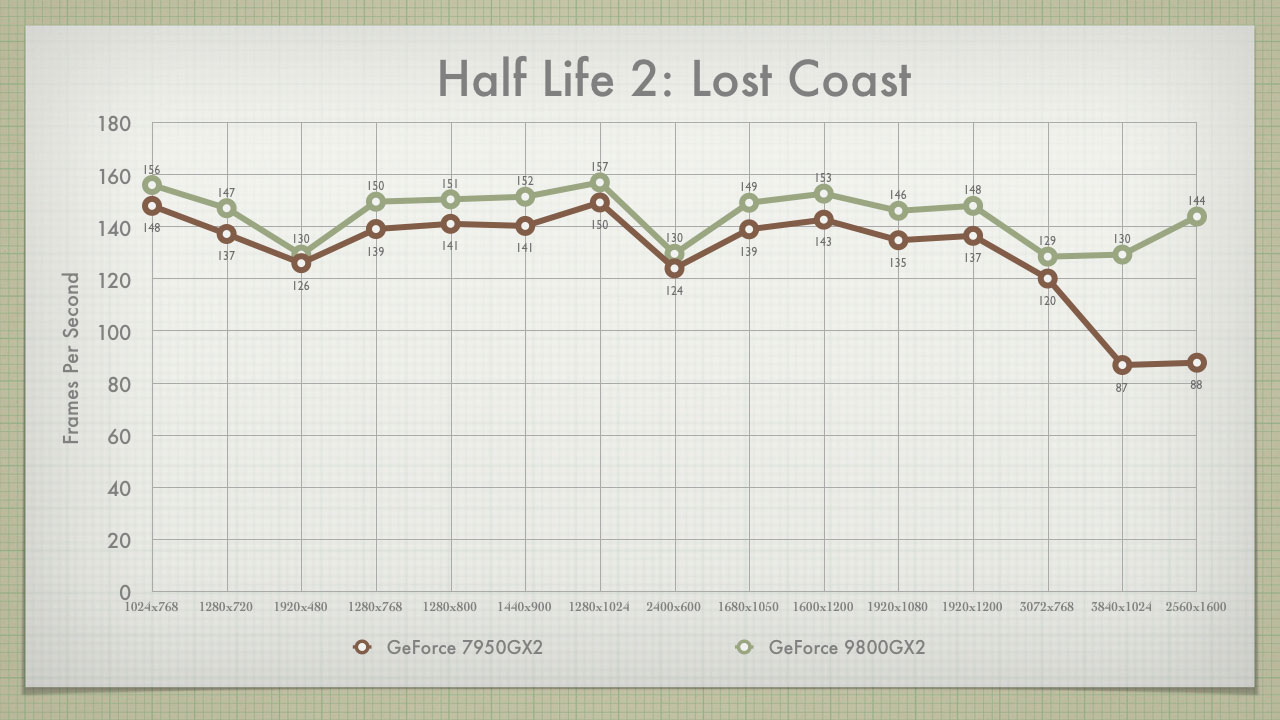

NVIDIA GeForce 9800GX2 Review - Benchmarking Half Life 2: Lost Coast

Up through 2.5M pixels, the 9800GX2 gained between 4 and 11fps. The smallest increases were at the TH2Go resolutions, all of which hit right at 130fps with the 9800GX2. So, even though 3840x1024 has over 4.25x the pixels of 1920x480, the performance is dead even.

The 9800GX2 really starts to shine in the 4M pixel area, adding 43fps (+50%) at 3840x1024 and 56fps (+63%) at 2560x1600. If HL2 is your game and you play at less than 4M pixels, the performance increase between the GX2 generations will be negligible. But if you are playing at 4M pixels, the performance increase is astounding. With the 9800GX2, Half Life 2 basically becomes CPU-limited.

This test was run using the built-in stress test in Half Life 2: Lost Coast. It provides a set course through a number of environments, showcasing particle, lighting and water effects. It also showcases HDR, which was added to Half Life 2 with the Lost Coast expansion. All of the settings were maxed out. It was run with Full HDR, 4xAA/16xAF, and V-sync off.

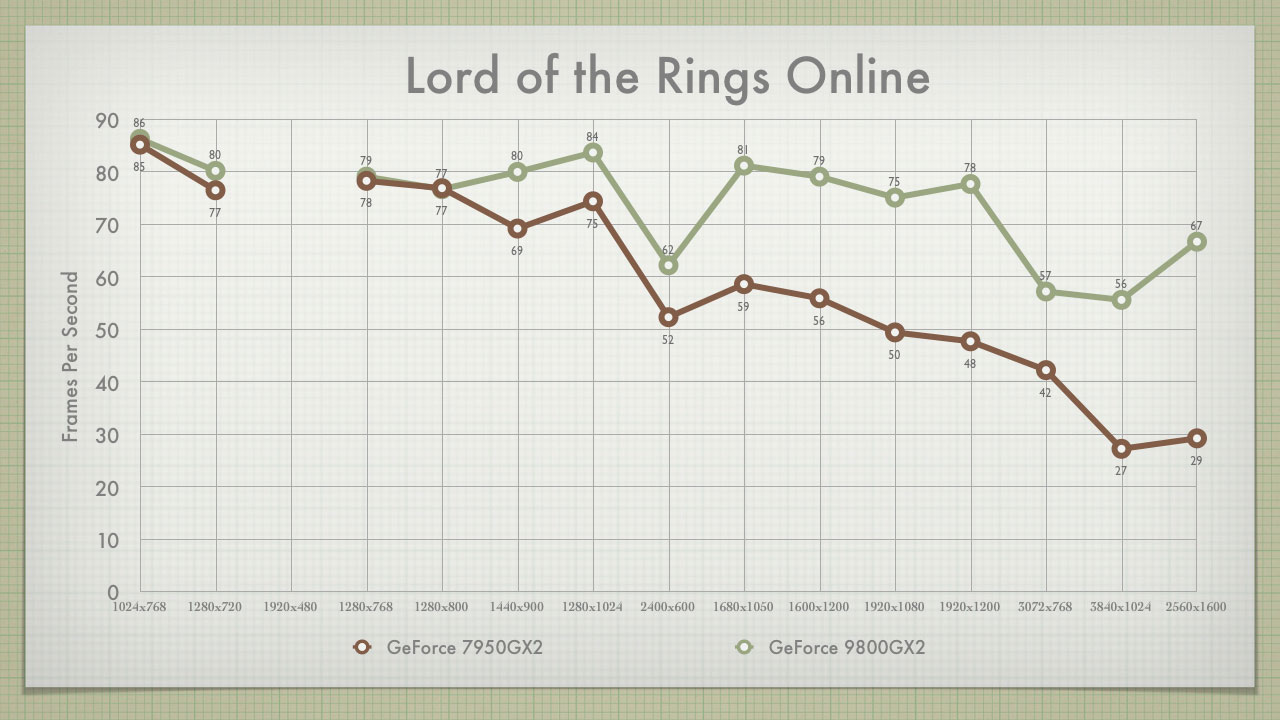

NVIDIA GeForce 9800GX2 Review - Benchmarking Lord of the Rings Online

Lord of the Rings Online is a pretty game, and cranking up the settings only helps to reinforce that. The 7950GX2 posted decent fps across most of the spectrum, especially considering that I had the game running on "Ultra High" settings. With the 7950GX2, the game stayed above 60fps on the low end, and hovered between 45 and 60fps in the middle sections. The high end brought it down to under 30fps. While fps isn't critical for an MMO, this test was purely a "scenery" test; combat, with all of its action and particles, would certainly bring the scores down even further.

The 9800GX2 tracked right along with the 7950GX2 at the low end. It started to separate itself at 1440x900, and produced a significant performance gap at 1680x1050 and beyond. Between 1680x1050 and 1920x1200 (the mainstream of widescreens), the 9800GX2 added 20-30fps. This represented a 30%-60% increase, basically 1/3 to 2/3 more frames. The performance delta narrowed a bit at 3072x768, where the 9800GX2 took a hit from the super-wide Surround Gaming aspect ratio, and where the 7950GX2 still posted average framerates above 40fps. But performance more than doubled at the 4M pixel mark, with the 9800GX2 averaging 67fps at 2560x1600.

For the test, I tried to find a place where I could make a run and not be molested by wandering mobs or high traffic. I found a place at the Hunting Lodge, outside of Archet. This is right outside the starting area in Bree-land. I picked a run that would highlight some water effects, along with some foliage. It's not necessarily impressive, but it was repeatable, and it still managed to showcase a good performance gap between the cards. The test was run with settings at "Ultra High" with 4xAA. Just to show how punishing the "Ultra High" setting is: when I dropped the setting to "Very High," my frames at 2560x1600 jumped from 67fps to 90fps+. That represents a performance increase of more than a third, with just a slight decline in the visuals.
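The percentage gains quoted throughout this review are measured against the slower result: (new − old) / old. A small Python helper, using the LOTRO "Ultra High" vs. "Very High" figures from this section as the example; since "90fps+" is a lower bound, the true gain may be somewhat larger. The function name is just for illustration.

```python
"""Relative frame-rate gain, as used for the "+X%" figures in this review."""


def percent_gain(old_fps: float, new_fps: float) -> float:
    """Increase from old_fps to new_fps, as a percentage of old_fps."""
    if old_fps <= 0:
        raise ValueError("old_fps must be positive")
    return (new_fps - old_fps) / old_fps * 100.0


if __name__ == "__main__":
    # LOTRO at 2560x1600: 67fps on "Ultra High" vs. 90fps on "Very High".
    print(f"{percent_gain(67, 90):.0f}%")  # prints 34%
```

Note that the same 23fps difference would be only a 26% drop if measured from the faster result, so it matters which side is the baseline.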

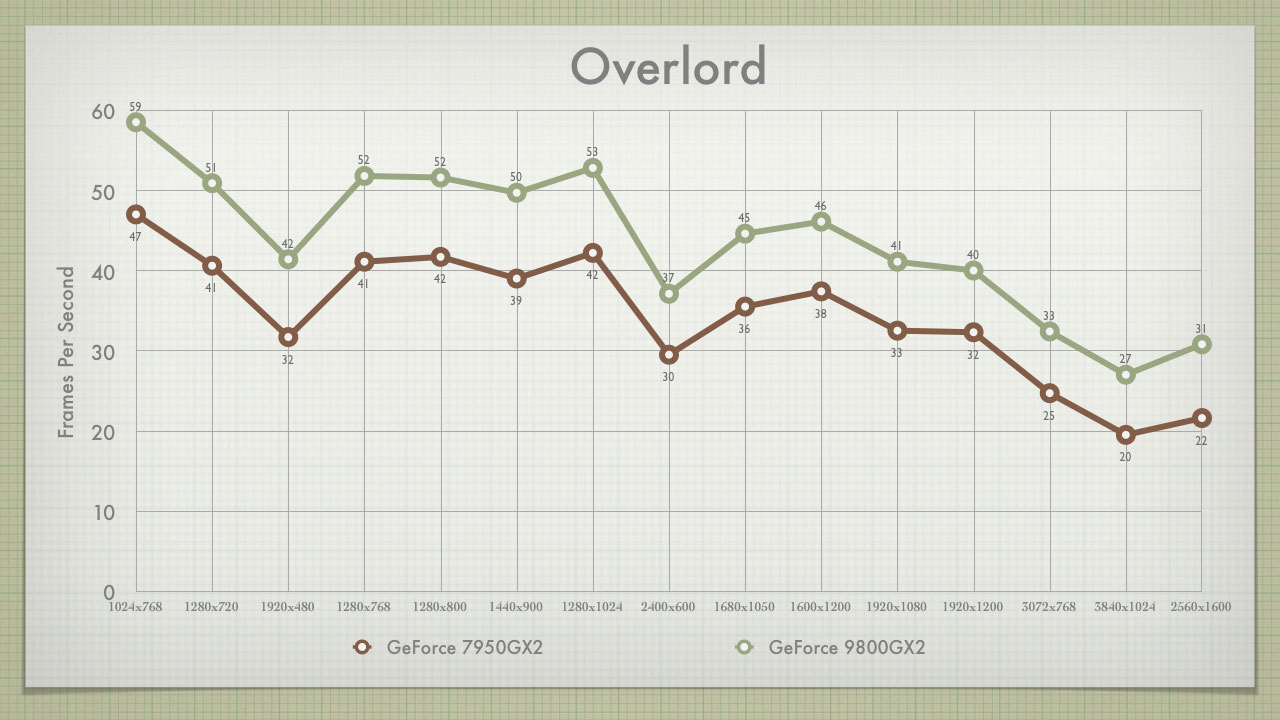

NVIDIA GeForce 9800GX2 Review - Benchmarking Overlord

For me, Overlord is a guilty pleasure. I haven't gotten very far in the game, but I love being the bad guy. I also find Overlord to be a very pretty game. Even though it doesn't have the lifelike foliage of LOTRO, or the mind-numbing amount of textures in Oblivion, it will put a video card through its paces. Overlord responded to the 7950GX2 about how I would expect, with valleys at the Surround resolutions, a peak at 1280x1024, and a downward trend at the 4M pixel range. What did surprise me is that the game is still demanding enough to be GPU limited on the 9800GX2, even at the low end of the pixel scale.

The 9800GX2 added 7-12fps across the board. Depending on the original fps from the 7950GX2, this represented a 25% (at 1024x768) to 50% (at 2560x1600) jump in framerates. The two trend lines have virtually the exact same shape; the line for the 9800GX2 is just "bumped" up a bit. The 9800GX2 brings the low end of the scale north of 50fps, the middle ground into the 40s, and the high end to 30fps. All in all, it makes the game much more playable.

I played the game long enough to pass the training and intro mission. This let me enter the game world and pick a free-roaming path. I found a rather short path that winds through the countryside and catches a sunset and some particle effects. Like LOTRO, it's not a long run, but it is long enough to put both cards through their paces.

I ran the game at max settings with 4xAA and V-sync off.

NVIDIA GeForce 9800GX2 Review - Benchmarking World in Conflict

Like Half Life 2, World in Conflict comes with a built-in benchmarking tool. The tool plays a set series of animations, using the game's engine. The 7950GX2 supplied passable (>20 fps) framerates at 1280x1024 and below. Beyond that, framerates plateaued at 15fps and then bottomed out in the single digits at the 4M pixel mark.

The 9800GX2 provides significant improvement across the board, with the frame rate delta increasing as the pixel count grows. At 1024x768, we see a 9fps (+35%) improvement; while at the high end (3840x1024 and 2560x1600), we see a 19fps (+211%) improvement.

No matter what resolution you play at, the 9800GX2 is a worthwhile investment. If you play at the higher resolutions, you get more bang for each buck.

This test was run using the built-in stress test. All settings were maxed out. It was run with 4xAA and V-sync off.

NVIDIA GeForce 9800GX2 Review - Conclusions

The 9800GX2 provides strong performance at nearly all resolutions (even with my nForce 590 PCIe 1.0 motherboard). The 9800GX2 is a very capable card, especially at the 4M pixel mark. It allows for easily playable frame rates at the highest resolutions and at the highest quality settings. I feel comfortable assuming that if you are considering this kind of investment, you've already made an investment in either a large monitor or a Surround Gaming setup. The 9800GX2 isn't the top performing setup in NVIDIA's product line. As of this writing, the crown goes to a pair of 9800GTXs in SLI.

The landscape is a little muddier than it was in the days of the 7950GX2, due to the arrival of Tri-SLI. You can use two 9800GX2s for Quad SLI, but Tri-SLI allows you to chain together three cards. This adds a whole other dimension to the cost/benefit analysis. SLI always allowed you to increment your way into better performance: spend big money on the first card now, then grab the second as prices drop. With Tri-SLI, you can now do the same in three steps, making the last addition very cost effective.

Current thinking seems to be that Tri-SLI and Quad SLI are overkill right now, at least for the price. There are some upcoming developments from NVIDIA that may make use of the leftover processing in Tri and Quad SLI. NVIDIA bought Ageia and their PhysX technology, and they are adding PhysX processing to their 8-series and 9-series cards through a software update. If there is still headroom in Tri-SLI or Quad SLI, then PhysX might be the key to filling it. This idea makes a lot of assumptions: 1) the PhysX implementation comes out in a timely manner, 2) the PhysX implementation works effectively on the GPU, 3) the PhysX processing is able to capture "excess" processing cycles without impacting GFX performance, 4) the games you play support PhysX, and 5) you personally care about physics processing in general. These are a lot of assumptions, and only time will tell how it all settles out. But this does set up the potential for greater value from an existing or potential purchase.

The 9800GX2 also provides a good upgrade path for someone with an older machine that isn't capable of Tri-SLI, or who prefers a motherboard chipset that doesn't support "traditional" SLI. Grab a 9800GX2 now to get SLI performance, and then pick up another 9800GX2 at a later date (if Quad SLI is no longer "overkill"). There's no doubt that NVIDIA has a strong (if somewhat confusing) product line, and the 9800GX2 is a serious improvement over the 7950GX2 in terms of both performance (as expected) and stability. If you need SLI in one card, the 9800GX2 is a solid performer.

If I had one real "hit" on the card, it's that I would have liked to see the GX2 be a 1.5GB or 2GB card. Getting 768MB on each GPU would help performance in games with large amounts of textures. The single cards moved up in memory, and I wish the GX2 had followed suit. Having SLI with only 512MB of addressable memory is a let-down, and having 512MB in Quad SLI is almost a crime. Adding more memory could have made Quad SLI the top performing setup in the NVIDIA product family.
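The reason the memory total on the box overstates what games can use is that SLI rendering modes such as alternate frame rendering generally keep a full copy of textures and buffers on every GPU. A tiny Python sketch of that arithmetic, under that mirroring assumption; the function name and the "hypothetical 1.5GB GX2" entry are illustrative only.

```python
"""On-board memory vs. usable memory when SLI mirrors data on every GPU."""


def effective_vram_mb(per_gpu_mb: int, gpu_count: int) -> dict:
    """Assumes each GPU holds its own full copy of textures and buffers,
    so usable memory is one GPU's share, not the sum across GPUs."""
    return {"total_mb": per_gpu_mb * gpu_count, "effective_mb": per_gpu_mb}


if __name__ == "__main__":
    configs = {
        "9800GX2 (2 GPUs)": (512, 2),
        "Two 9800GX2s in Quad SLI (4 GPUs)": (512, 4),
        "Hypothetical 1.5GB GX2 (2 GPUs)": (768, 2),
    }
    for name, (per_gpu, gpus) in configs.items():
        mem = effective_vram_mb(per_gpu, gpus)
        print(f"{name}: {mem['total_mb']}MB on board, {mem['effective_mb']}MB usable")
```

Under this assumption, adding GPUs multiplies the memory you pay for but leaves the usable pool at 512MB, which is why a larger per-GPU allotment matters more than a larger card total.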

What's Next?

I plan to continue benchmarking new games on the 9800GX2, and I will add new scores here and to the article on Widescreen and Surround performance. I've finally been able to get Vista loaded. I had to make some minor configuration changes to my rig (including going from 4GB to 2GB of RAM). I did all of this after this review, so I'll be starting over for some DX9 vs. DX10 benchmarks.